A/B Testing Guide: Comprehensive Techniques for Digital Marketers

Published on: Oct 11, 2023 Updated on: May 16, 2024

With an increasing number of users migrating online, online advertisers face the growing challenge of catering to the needs of their expanding customer base. How does one keep up with this demanding shift? The answer lies in efficient, data-driven strategies.

At the heart of these strategies is A/B split testing, a powerful yet simple method for experimentation. This technique allows businesses to derive more meaningful and cost-efficient results from their campaigns and ensures that strategies are tailored to achieve the best outcomes.

As a data-driven digital marketing agency, we use A/B split testing in our website optimization projects and in the campaigns we manage as a pay-per-click (PPC) agency.

If you are still getting familiar with the nuances of A/B split testing, this comprehensive A/B testing guide will illuminate the concept so that you can seamlessly integrate it into your digital marketing strategies.

What is A/B testing?

A/B testing, also known as split testing, is a digital marketing strategy that compares versions of an ad, app, email, or website to see which version performs better. Through this strategy, you can optimize your conversion rate and discover ways to drastically improve your marketing performance.

Its versatility is what makes A/B testing a darling among digital marketers. Whether you're deliberating on the optimal design for a sales landing page, experimenting with varied social media content formats, or iterating ad copies to find the perfect pitch, split testing provides the data-backed insights to benefit and refine your approach.

Think of it as the compass that directs you toward the tweaks and changes that could amplify your paid marketing ventures. As we delve deeper into the subsequent sections, you'll better understand how to integrate A/B testing into your marketing strategies effectively and the myriad benefits it brings.

Benefits of A/B testing in digital marketing today

As user interactions and platform algorithms keep changing, understanding the benefits of A/B testing in digital marketing becomes even more essential. Here are six core benefits that underscore its significance in growth marketing:

1. Improved user engagement

A/B testing tailors user experiences to audience preferences. Experimenting with variations ensures engagement at every level, from awareness to conversion. This dynamic approach ensures that your content resonates with your audience, bolstering meaningful interactions.

2. Revamped content, ads, and campaigns

The ever-changing digital realm necessitates regular content and strategy refreshes. A/B testing lets you home in on compelling content, equipping copywriters and creatives with actionable insights. This technique refines your campaigns by integrating the latest copywriting trends, tweaking design elements, or experimenting with new messaging.

3. Risk mitigation with validated optimizations

The trial-and-error essence of A/B testing means every strategy is validated before it is rolled out. Experimenting extensively before launch substantially reduces the risks associated with new campaigns.

4. Boosted conversion rates and sales

With changing consumer behaviors in 2023, knowing what clicks with your audience is imperative. A/B testing offers those insights. You're better poised to elevate conversion rates and capitalize on PPC ecommerce opportunities by identifying what works.

5. Ease of analysis

In an age overwhelmed with data, streamlined analysis is a boon. A/B testing provides structured datasets, simplifying the analytical process. It becomes straightforward to discern which strategies outperform and should be carried forward.

6. Cost-efficiency

One of the less-emphasized yet crucial benefits of A/B testing is its cost-effectiveness. By identifying what truly works, businesses can allocate resources more efficiently, ensuring every dollar spent yields the maximum return on investment (ROI).

Remember, even minute improvements can lead to substantial results. A/B testing arms you with insightful data, setting the stage for refining and elevating your marketing strategies.

Having understood the myriad benefits of A/B testing, it's pertinent to delve deeper into its significance. Let's pivot to understanding how essential A/B split testing is in marketing today.

Importance of A/B testing in marketing

A/B split testing gives marketers a data-driven methodology to refine strategies, validate assumptions, and improve conversion rates. However, to truly appreciate its value, one must understand its primary goals, industry-specific applications, and the recommended frequency for conducting these tests.

What is the primary goal of A/B split testing?

At its core, A/B testing is about comparison. It's a method to compare two versions of a webpage, email, ad, or other digital asset against each other to determine which performs better in achieving a specific goal.

This goal could range from enhancing click-through rates to increasing sales or any other measurable metric. The overarching aim is to gather data-backed insights to make informed decisions, eliminating guesswork and reducing the risk of unproductive changes.

Are there any industry-specific applications for A/B testing?

Certainly! While the concept of A/B testing is universally applicable, its execution can vary based on industry.

- Ecommerce - Retailers often use A/B tests to optimize product listings, refine checkout processes, or experiment with pricing strategies.

- SaaS (Software as a Service) - Companies might test different onboarding processes, dashboard designs, or feature placements to enhance the user experience and increase subscription rates.

- Publishing - Media houses or bloggers can apply A/B tests to headlines, content layouts, or subscription pop-ups to boost reader engagement and sign-ups.

- Financial Services - Banking and finance platforms might test form designs, service offerings, or promotional messages to maximize customer acquisitions.

Every industry, from healthcare to entertainment, can tailor A/B testing methods to meet their unique objectives.

How often should A/B tests be conducted?

The frequency of A/B testing depends on various factors, including the website's traffic, the changes being tested, and the desired statistical significance. However, a general guideline is to run the test until you have enough data to make a statistically confident decision, typically a few weeks for most businesses. Regularly conducting A/B tests is advisable as consumer behavior, technological trends, and market dynamics evolve, necessitating continual optimization.

Step-by-step guide to A/B testing

Today, A/B testing remains an indispensable tool for marketers aiming to sharpen their strategies and elevate campaign outcomes. Whether fine-tuning a landing page or adjusting a PPC ad, A/B testing provides the clarity needed to make informed decisions.

This A/B testing guide will help you execute A/B testing for all your pay-per-click (PPC) campaigns. Take a look at this quick rundown of the essential steps:

- Know your test objectives

- Define your "control" and "challenger"

- Know what variables to test for your PPC campaign

- Prioritize your tests

- Formulate a hypothesis

- Calculate sample size and duration

- Ensure accurate tracking

- Run a campaign for A/B testing

- Analyze the results

- Connect the results back to your goal

- Implement the winning version

- Optimize and iterate

Excited to learn more? Read on for a detailed look at each step of this A/B testing method.

1. Know your test objectives

Every test begins with a clear objective. What's the specific outcome you're aiming for? This could be boosting click-through rates, increasing conversions, or refining user engagement. Establish key performance indicators (KPIs) like impressions, clicks, or conversions to guide the testing process.

2. Define your "control" and "challenger"

Your "control" is the existing version, while the "challenger" is the new variation you wish to test. This distinction forms the foundation of A/B testing, allowing you to precisely identify which changes yield improvements.

3. Know what variables to test for your PPC campaign

With numerous platforms available, it's essential to pinpoint the precise variables relevant to your campaign. Whether it's Google's networks, social media platforms like Facebook and Instagram, or emerging platforms like X, understanding platform-specific nuances is critical.

The following are common platforms with testable and optimizable variables for your PPC campaigns:

A. Google Search Network

When you search for something on Google, you’re often shown Google Search Network ads at the top or bottom of your search results page. By experimenting with your ad elements, you can increase your established conversion rate. Google Ads (formerly AdWords) elements you can test include:

- Headlines 1, 2, and 3;

- Descriptions 1 and 2;

- Display URL (path), and;

- Call-to-action (CTA).

B. Google Display Network

Google also displays visual marketing materials throughout its Google Display Network. Some common testable variables include:

- Headline;

- Sub-headline;

- Copy;

- Product value;

- Subject;

- Background;

- Common elements, and;

- CTA.

C. Google Shopping Network

Google can show your products on its Google Shopping Network, which works similarly to the previously mentioned ad networks. Google will promote any and all of your products, while you only pay when your customers click.

TIP: Check out our Google ad optimization guide for a full walkthrough of the process.

D. Facebook and Instagram

These two social media platforms have a thriving marketing network for all your promotional needs. Since they have similar business networks, here’s an aggregated list of elements you can experiment with when conducting split tests:

- Placement;

- Audiences;

- Demographics;

- Text;

- Images;

- Headlines;

- Copy;

- Product value;

- Background, and;

- CTA.

Though both social media platforms share the same Ads Manager system, do note that Instagram focuses on more visual marketing. Facebook, meanwhile, has other options that can be more text-focused, which you can experiment with later on. It’s best to check out our Facebook ad optimization guide as well for a full rundown of what you can do.

E. X (formerly Twitter)

Last but not least is X, a wildly popular social media application that has its own established business marketing network as well. If your business aims to establish a strong presence on this app, the elements you can experiment with on X include:

- Text/Tweets;

- Image;

- Video;

- Pre-roll views;

- Moments;

- Audiences;

- Demographics, and;

- CTA.

How do you know which one to test?

This is a good question, and one we get often: what comes first?

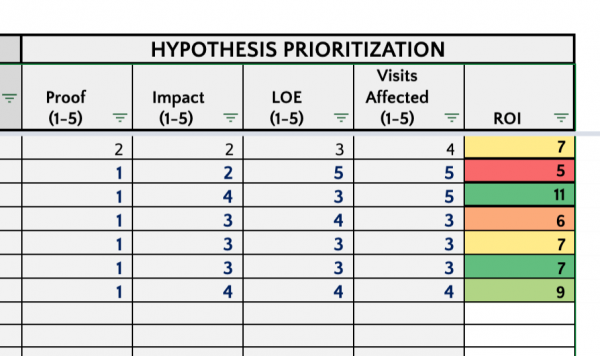

To know what variables to test first on any of these platforms, you can use the Value vs. Effort prioritization framework to find out if changes to your chosen variables will actually advance your overall metrics and goals.

Take for example our prioritization framework:

Once we have an optimization we want to test, we consider four things: the sources behind our claim, the analytics data we can use to validate that claim, the estimated impact on our objective, and the overall effort or time a specialist needs to execute and implement it.

Remember, this is just one efficient way to prioritize implementations to help you avoid analysis paralysis. You can try out different prioritization methods or develop your own based on urgent needs and current capacity.

4. Prioritize your tests

With countless elements to test, prioritization is key. Use frameworks like PIE or ICE if you are just starting out with A/B testing and digital experimentation.

The PIE framework, popularized by the Widerfunnel team, is incredibly easy to use. Potential, importance, and ease are the three ranking components that make up this framework, and each is ranked from 1 to 10. It is quick to apply and ideal for teams that are just getting started with A/B testing and digital experimentation at a low test velocity.

The GrowthHackers team developed the ICE framework. Like PIE, it uses three ranking factors, in this case impact, confidence, and ease, and each is scored from 1 to 10. It is as fast and easy to use as PIE, and its simplicity makes it a good fit for teams with modest testing programs or teams that are new to testing.
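To make the scoring concrete, here is a minimal Python sketch of how a PIE-style ranking could work. It assumes the overall score is the plain average of the three ratings, which is one common convention rather than a rule stated in this guide. The `Idea` class, the `prioritize` function, and the backlog entries are all hypothetical and made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """One test idea with its three PIE ratings, each scored 1-10."""
    name: str
    potential: int
    importance: int
    ease: int

    def score(self) -> float:
        # Assumption: the overall score is the plain average of the three ratings.
        return round((self.potential + self.importance + self.ease) / 3, 2)


def prioritize(ideas):
    """Return the ideas sorted from highest to lowest score."""
    return sorted(ideas, key=lambda idea: idea.score(), reverse=True)


# Hypothetical backlog; names and ratings are invented for this example.
backlog = [
    Idea("Redesign landing page", potential=9, importance=8, ease=2),
    Idea("Rewrite headline 1", potential=8, importance=9, ease=7),
    Idea("Change CTA wording", potential=6, importance=7, ease=10),
]

ranked = prioritize(backlog)
for idea in ranked:
    print(f"{idea.score():>5}  {idea.name}")
```

The same structure would work for ICE by renaming the first two fields to impact and confidence. Some teams multiply the three ICE ratings instead of averaging them, so treat the formula as a team decision.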

5. Formulate a hypothesis

Once you have your objective and specific control or challenger variables, you can now identify a potential hypothesis. This hypothesis should pose a problem or question that needs to be answered by your test.

A hypothesis isn’t chosen randomly. You’ll need to refer to data from your previous tests and research. This can include web analytics, user tests, heat maps, customer feedback, and past heuristic evaluations. Review your performance data to understand what has been achieved and what needs to be improved.

You can formulate a hypothesis by asking yourself what needs to be improved through your current process. Check out this example to phrase your own hypothesis:

“Changing an element from A to B will increase/decrease a KPI.”

Of course, this isn’t a final solution to achieve your objectives. You still need to check your data, KPIs, and campaign objectives to formulate an effective hypothesis.

6. Calculate sample size and duration

Now that you have a hypothesis, you can calculate and set your sample size and duration. The minimum data recommendations according to Adalysis for most companies are:

- Low traffic: 350 impressions / 300 clicks / 7 conversions;

- Mid traffic: 750 impressions / 500 clicks / 13 conversions, and;

- High traffic: 1,000 impressions / 1,000 clicks / 7 conversions.

For advanced analysis, you can use a “Paired T-Test,” “Independent Sample T-Test,” “Wilcoxon Signed-Rank Test,” or “Mann-Whitney U Test” to see if the differences in your A/B testing findings are statistically significant.

If there are significant differences, then you can use the data for comparison. If there are no significant differences, you’ll need to collect more data points for further analysis.
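If you would rather estimate a sample size from your own conversion rates than rely on fixed thresholds, the sketch below shows one way to do it. It uses the standard normal-approximation formula for comparing two proportions, which is a different approach from the Adalysis recommendations above. The function names, the 5% significance level, the 80% power, and the traffic figures are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Visitors needed in EACH variant for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    pooled = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


def days_to_run(n_per_variant, daily_traffic, variants=2):
    """Days needed when daily traffic is split evenly across all variants."""
    return math.ceil(n_per_variant * variants / daily_traffic)


# Hypothetical scenario: the control converts at 5% and we hope the
# challenger reaches 6%, with 1,000 visitors per day across both variants.
needed = sample_size_per_variant(baseline_rate=0.05, expected_rate=0.06)
print("Visitors per variant:", needed)
print("Estimated days:", days_to_run(needed, daily_traffic=1000))
```

Note how quickly the requirement grows as the expected lift shrinks: detecting a small improvement takes far more traffic than detecting a large one, which is why low-traffic campaigns often need to test bolder changes.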

7. Ensure accurate tracking

Before launching your test, ensure the A/B testing tools and framework you are using are accurately set up. This ensures that every interaction gets captured, providing a wealth of data for analysis.

8. Run a campaign for A/B testing

Once you have everything in place, you can run your test campaign for your ad, landing page, copy, and more. Make sure all other variables are left untouched so you know how your experiment actually affects your final results. If you change anything else mid-test, you won’t be able to tell which change caused the difference, which goes against split testing best practices.

9. Analyze the results

You can now compare results between your control and challenger variables. Use your established KPIs to analyze the results and see if you achieved your objectives. You can further analyze your results and explore other hypotheses in succeeding tests.
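As a rough illustration of this comparison, the sketch below checks whether the gap between two conversion rates is larger than chance alone would explain. It uses a two-proportion z-test, chosen here because it suits conversion counts and needs only the Python standard library. It is a stand-in for illustration, not a test this guide prescribes. The function name and the visitor and conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a / conv_b are conversion counts; n_a / n_b are visitor counts.
    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("No variation in the data; the test is undefined.")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Hypothetical results: control (A) vs. challenger (B).
rate_a, rate_b, z, p_value = two_proportion_z_test(
    conv_a=400, n_a=8000, conv_b=480, n_b=8000
)
print(f"Control: {rate_a:.2%}  Challenger: {rate_b:.2%}")
print(f"z = {z:.2f}, p = {p_value:.4f}")
if p_value < 0.05:  # Assumption: a 5% significance threshold
    print("The difference is statistically significant.")
else:
    print("Not significant yet; keep collecting data.")
```

A p-value below your chosen threshold suggests the challenger's lift is unlikely to be noise. It does not tell you how large the lift is in business terms, so read it alongside your KPIs.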

10. Connect the results back to your goal

Interpret the results in the context of your initial objectives. Was the hypothesis validated? How does the winning variation align with broader marketing goals? With this analysis in mind, you can now declare a “winner” between your control and challenger, based on your objectives and KPIs.

11. Implement the winning version

Based on your analysis, roll out the winning version, whether that is the control or the challenger, to maximize benefits.

12. Optimize and iterate

Of course, this isn’t the end of your split testing journey. There will always be more ways to optimize your digital marketing, especially after you test just one variable. Optimize your elements and then embark on your analytical journey all over again.

A/B testing tips for 2023

Refining your approach can significantly amplify your outcomes as you dive deeper into A/B testing. Here are six A/B testing tips for you:

- Take it one step at a time. Avoid testing multiple elements simultaneously, since you won't be able to tell which change drove the result. Focus on one variable, be it a headline, image, or call-to-action, to isolate its impact.

- Test early, test often. The earlier you start your A/B testing, the better. Regular tests help you adapt to changes promptly and can offer richer data over time, aiding optimization.

- Embrace change. Consumer behaviors shift. Adapting to these shifts and being willing to adjust your strategies based on test results will keep you ahead.

- Never stop improving. Even after a successful test, there's always room for enhancement. Continuously seek potential areas for further optimization.

- Use technology to your advantage. 2023 offers advanced A/B testing tools that can automate processes, provide deeper insights, and enhance testing accuracy. Staying updated with these tools can give you an edge.

- Collaborate and share insights. A/B testing isn't just a marketer's tool. Sharing insights across departments, be it design, content, or sales, can lead to a cohesive and effective strategy.

Key takeaways

Harnessing the power of A/B testing effectively is essential for brands striving to optimize their digital strategies. As you navigate through the complexities of digital marketing, consider these fundamental insights:

- Embrace the idea of A/B testing. Think of it like tuning a guitar; get those digital strategies sounding just right based on what your audience loves.

- Rely on data. Don't sail on hunches. Let A/B testing's data navigate your course, steering you towards smart choices and clearer waters.

- Evolve and re-test. Digital trends are as fleeting as fashion. Stay updated, keep iterating, and tailor your strategies like the latest in-vogue ensemble for your audience. Always be in style!

As you integrate these principles into your marketing approach, remember that each test, each insight, pushes your brand one step closer to its audience. Should you have further inquiries or seek deeper insights, Propelrr is here to assist and engage with you.

For direct conversations or more topics of interest, feel free to message us on our official Facebook, X, and LinkedIn accounts. Let's chat!