Six-Point Priority Site Audit for Large Websites Post-Panda

Author & Editor

Founder & CEO

Published on: Dec 13, 2011 Updated on: Mar 19, 2025


I believe that Google Panda, "the Great Purge", is indeed making the internet a better source of information, but for the huge websites hit by it, it is a different monster to deal with.

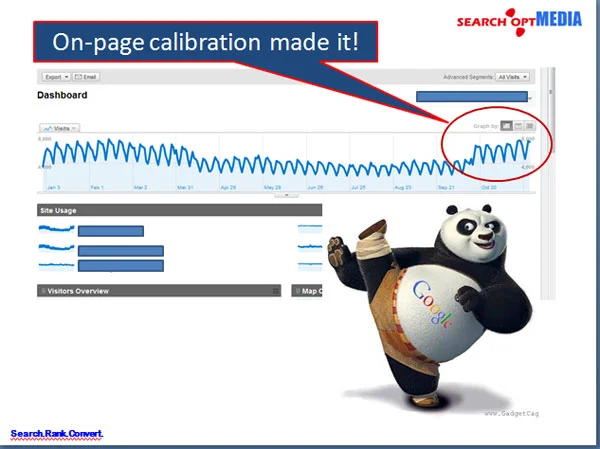

In most cases, post-Panda SEO for huge websites deals mainly with information architecture, and fixing the on-page structure brings big improvements in traffic, bounce rate and SERP rankings.

However, when dealing with huge websites, web strategists and SEOs are always limited by time, so they must decide which priorities to address first in order to see improvements sooner. Of course, the bosses will always want immediate results, as if they should have been available "yesterday".

So I prepared the following Six-Point Priority Site Audit for Enterprise Websites to help identify those priorities:

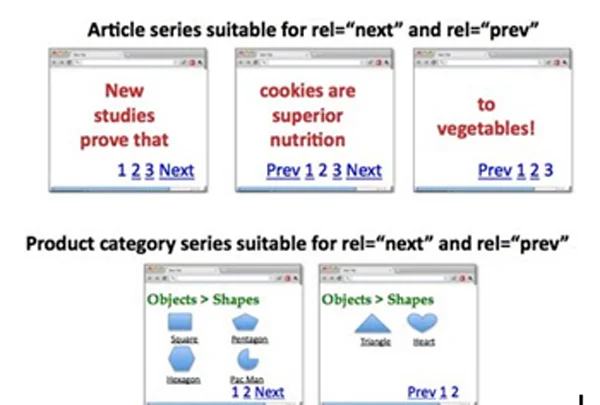

I. Address indexation of paginated pages and consolidate link juice

Pagination is always tricky on large sites. It is often the result of faulty coding habits by developers who are not concerned about duplicate pages.

How to implement rel="prev" and rel="next"?

Page 1: http://www.example.com/page1.html

<link rel="canonical" href="http://www.example.com/page1.html" />

<link rel="next" href="http://www.example.com/page2.html" />

Page 2: http://www.example.com/page2.html

<link rel="canonical" href="http://www.example.com/page2.html" />

<link rel="prev" href="http://www.example.com/page1.html" />

<link rel="next" href="http://www.example.com/page3.html" />

….

Last Page:

<link rel="canonical" href="http://www.example.com/lastpage.html" />

<link rel="prev" href="http://www.example.com/secondtothelastpage.html" />
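On a large site, a template usually emits these tags instead of someone typing them by hand. The minimal Python sketch below assumes a hypothetical URL scheme where page N of a series lives at `{base_url}/pageN.html`, as in the example URLs above. It shows the rule:

- Every page is self-canonical.
- Page 1 gets only rel="next".
- The last page gets only rel="prev".
- Every page in between gets both.

`pagination_links` is an illustrative name, not an existing API.

```python
from html import escape


def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Return canonical/prev/next <link> tags for one page of a paginated series.

    Assumes a hypothetical scheme where page N lives at f"{base_url}/page{N}.html".
    """
    if not 1 <= page <= last_page:
        raise ValueError("page must be between 1 and last_page")

    def url(n: int) -> str:
        return f"{base_url}/page{n}.html"

    # Each component page points its canonical at itself.
    tags = [f'<link rel="canonical" href="{escape(url(page))}" />']
    if page > 1:  # the first page has no previous page
        tags.append(f'<link rel="prev" href="{escape(url(page - 1))}" />')
    if page < last_page:  # the last page has no next page
        tags.append(f'<link rel="next" href="{escape(url(page + 1))}" />')
    return tags
```

For example, `pagination_links("http://www.example.com", 2, 4)` returns three tags for page 2: its canonical tag, a prev tag pointing to page1.html, and a next tag pointing to page3.html.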

Option:

A "view-all" page can also be a good strategy when we want Google to index it rather than the component pages. We can do this by pointing the rel="canonical" of each component page to the view-all page. HOWEVER, if the view-all page has high latency (loads slowly), it is better to stick to the rel="prev" and rel="next" strategy.
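You can write the choice between the two strategies as a small decision rule. The sketch below is only an illustration. `canonical_target` is a hypothetical helper. The 3-second `max_load_seconds` cut-off is an assumed threshold, not a figure from Google, so tune it against your own latency measurements.

```python
from typing import Optional


def canonical_target(page_url: str,
                     view_all_url: Optional[str],
                     view_all_load_seconds: Optional[float],
                     max_load_seconds: float = 3.0) -> str:
    """Return the URL a component page should name in rel="canonical".

    max_load_seconds is an assumed latency threshold, not an official value.
    """
    fast_enough = (view_all_load_seconds is not None
                   and view_all_load_seconds <= max_load_seconds)
    if view_all_url is not None and fast_enough:
        return view_all_url  # consolidate signals on the view-all page
    return page_url  # stay self-canonical and rely on rel="prev"/"next"
```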

II. Fix duplicate Meta Titles and Meta Descriptions reported in GWT

To address the duplicate Meta title and description issues reported in Google Webmaster Tools (GWT), we need to re-code how the titles and descriptions are generated so that each page gets its own.
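Before re-coding, it helps to see which pages actually share a title. The minimal sketch below assumes you already have a URL-to-title mapping, for example from a crawl export (the input format here is hypothetical). It groups URLs whose titles match after trimming whitespace and ignoring case. The same function works for meta descriptions.

```python
from collections import defaultdict


def find_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs that share the same title (or description) text.

    `pages` maps URL -> title. Comparison ignores case and surrounding
    whitespace. Only texts used by more than one URL are returned.
    """
    groups: defaultdict[str, list[str]] = defaultdict(list)
    for url, title in pages.items():
        groups[title.strip().lower()].append(url)
    return {text: sorted(urls) for text, urls in groups.items() if len(urls) > 1}
```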

How to implement good Titles on paginated pages?

Page 1: Title here with keywords

Page 2: Page 2 of 4, Title here with keywords

Page 3: Page 3 of 4, Title here with keywords

In effect, we have:

<head>

<link rel="canonical" href="http://www.example.com/page2.html" />

<link rel="prev" href="http://www.example.com/page1.html" />

<link rel="next" href="http://www.example.com/page3.html" />

<title>Page 2 of 4, Title here with keywords</title>

</head>
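The "Page N of M" title pattern is simple enough to generate in the page template. The minimal sketch below uses hypothetical function names. It leaves page 1 with the plain title and prefixes later pages. It also HTML-escapes the result so that characters such as `&` in keywords do not break the markup.

```python
from html import escape


def paginated_title(base_title: str, page: int, last_page: int) -> str:
    """Return a unique title for page `page` of `last_page`."""
    if not 1 <= page <= last_page:
        raise ValueError("page must be between 1 and last_page")
    if page == 1:
        return base_title  # page 1 keeps the plain, keyword-focused title
    return f"Page {page} of {last_page}, {base_title}"


def title_tag(base_title: str, page: int, last_page: int) -> str:
    # escape() turns &, <, > and quotes into entities so keyword text cannot break the markup.
    return f"<title>{escape(paginated_title(base_title, page, last_page))}</title>"
```

`title_tag("Title here with keywords", 2, 4)` produces `<title>Page 2 of 4, Title here with keywords</title>`.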

How to implement better, unique Meta Descriptions?

Page 2:

<title>Page 2 of 4, Title here with keywords</title>

<meta name="description" content="Listings 11-20 (out of 30), Title here with keywords" />

*Brand keywords in the title can be removed to make room for other local or long-tail keywords.
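The listing range in the description follows directly from the page number and the page size. The sketch below assumes a fixed (hypothetical) number of listings per page. Page p then shows listings (p - 1) * per_page + 1 through min(p * per_page, total). The helper name is illustrative.

```python
from html import escape


def listing_description(base_text: str, page: int, per_page: int, total: int) -> str:
    """Return a meta description tag naming the listing range shown on `page`."""
    first = (page - 1) * per_page + 1   # first listing on this page
    last = min(page * per_page, total)  # the last page may be only partly filled
    if page < 1 or first > total:
        raise ValueError("page is outside the listing")
    content = f"Listings {first}-{last} (out of {total}), {base_text}"
    return f'<meta name="description" content="{escape(content)}" />'
```

With 10 listings per page and 30 in total, page 2 yields the description `Listings 11-20 (out of 30), Title here with keywords`.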

Or

Alternatively, you can simply leave the Meta descriptions of the component pages blank. This lets the bots direct traffic and weight to the top pages, which is what we have wanted all along.

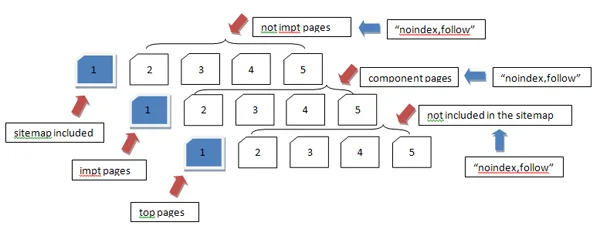

III. Resubmit sitemaps of important pages to reinforce indexing priorities, and use "noindex,follow"

Resubmitting sitemaps helps search engines prioritize the most important pages of the site so that they are indexed and weighted properly.
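A sitemap follows the sitemaps.org protocol. It is a `<urlset>` element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace with one `<url>` entry per page. Each entry has a `<loc>` and optional fields such as `<priority>`. The sketch below builds a sitemap from a hypothetical URL-to-priority mapping and lists the most important pages first. Search engines treat `<priority>` as a hint at most, so the real lever is deciding which pages go into the file at all.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, float]) -> str:
    """Build a sitemap XML string from a URL -> priority (0.0-1.0) mapping."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    # Highest-priority pages first; ties keep their original order.
    for url, priority in sorted(pages.items(), key=lambda kv: -kv[1]):
        if not 0.0 <= priority <= 1.0:
            raise ValueError(f"priority out of range for {url}")
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and <
        ET.SubElement(entry, "priority").text = f"{priority:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```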

How to implement "noindex,follow" on the less important pages?

Insert <meta name="robots" content="noindex, follow" /> in the <head> of each less important page. To target Google's crawler specifically, use <meta name="googlebot" content="noindex, follow" /> instead.

- Importantly, "noindex,follow" must be applied to component pages (pages 2, 3, etc.) to get rid of near-duplicate content, which Google often interprets as thin content.

- If the component pages of a particular series are deemed necessary, the "noindex,follow" can be removed.
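In a paginated series, the rule above comes down to a few lines in the template. The sketch below uses a hypothetical `robots_meta` helper:

- Page 1 gets no robots tag, because crawlers default to index, follow.
- Component pages get "noindex, follow".
- `keep_indexed=True` covers the component pages you decide you need.
- `bot="googlebot"` targets Google's crawler only.

```python
def robots_meta(page: int, keep_indexed: bool = False, bot: str = "robots") -> str:
    """Return the robots meta tag for a page in a paginated series ('' means no tag)."""
    if page == 1 or keep_indexed:
        return ""  # no tag: crawlers fall back to the default "index, follow"
    # Component pages: keep them out of the index but let link equity flow.
    return f'<meta name="{bot}" content="noindex, follow" />'
```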

Take note that it is not good practice to focus your efforts on indexing ALL the pages of an enterprise website. Instead, focus on the important pages that you want your audience to visit.

History:

The old practice of creating hundreds of thousands or even millions of pages, just to make Google believe that a website is an authority, no longer works. PANDA will make you cringe at having created those "thin content" pages on your website.

IV. Lower the BOUNCE RATE

BOUNCE RATE is an indicator to Google of a site's relevance to the users it sends from its search results. Propelrr® believes that it has considerable impact on rankings these days, so it can no longer be disregarded.
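For reference, classic Google Analytics defines bounce rate as single-page sessions divided by all sessions. The minimal sketch below shows that arithmetic. It assumes a (hypothetical) list giving the number of pages viewed in each session.

```python
def bounce_rate(pages_per_session: list[int]) -> float:
    """Percentage of sessions that viewed exactly one page."""
    if not pages_per_session:
        raise ValueError("no sessions")
    bounces = sum(1 for n in pages_per_session if n == 1)
    return 100.0 * bounces / len(pages_per_session)
```

Suppose four sessions viewed 1, 3, 1 and 2 pages. Two of the four bounced, so `bounce_rate([1, 3, 1, 2])` is 50.0.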

How to address it?

Aim for page titles that relate to the content of the page. When someone clicks on a search result, they have at least read the title. If your H1 is very different from it, chances are people will leave your site right away, assuming it does not offer what they are looking for.

More importantly, the page must offer good content that is relevant to the search result.

How to implement?

The title, H1, Meta description and body copy should all be relevant to the search queries that bring visitors to the page.

IMPORTANT: Content Writers should take this seriously.
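On a large site, you can flag title/H1 mismatches in bulk before content writers review them. The sketch below uses word-level Jaccard similarity (shared words divided by all distinct words) as a rough stand-in for "relevance". This is an assumption: it is a screening heuristic, not how Google measures relevance. The 0.3 threshold is arbitrary. The input is a hypothetical URL-to-(title, H1) mapping from a crawl.

```python
import re


def _words(text: str) -> set[str]:
    # Lowercase alphanumeric tokens; punctuation is ignored.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def title_h1_overlap(title: str, h1: str) -> float:
    """Jaccard similarity (0.0-1.0) between the words of a title and an H1."""
    a, b = _words(title), _words(h1)
    if not a and not b:
        return 1.0  # two empty strings are trivially identical
    return len(a & b) / len(a | b)


def flag_mismatches(pages: dict[str, tuple[str, str]], threshold: float = 0.3) -> list[str]:
    """Return URLs whose title and H1 overlap less than `threshold` (assumed cut-off)."""
    return sorted(url for url, (title, h1) in pages.items()
                  if title_h1_overlap(title, h1) < threshold)
```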

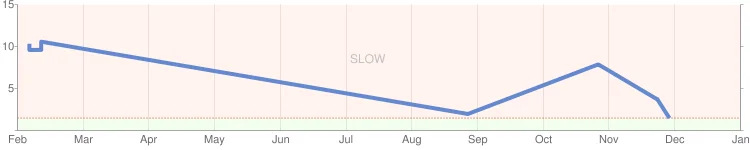

V. Increase SITE SPEED

Mayday, Caffeine and the PANDA updates all point toward user experience, and site speed is a big part of that. Again, this rests on the premise of giving users an excellent experience on the website. The faster the site, the longer users stay and the more often they return, and everything else follows up the charts. This is what Google wants.

Site speed can be a server-side issue or an application-side issue. Below are the things you need to do to speed up your website.

How to test and identify the specific "parts" of the website that slow it down the most?

Tools to use:

- http://www.webpagetest.org

- http://www.websiteoptimization.com/services/analyze/

- http://tools.pingdom.com/

- https://addons.mozilla.org/en-US/firefox/addon/firebug/

- https://addons.mozilla.org/en-US/firefox/addon/YSlow/

- https://code.google.com/speed/page-speed/download.html

Propelrr® identified the tools above for site speed performance testing and calibration. All six are good tools!
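The tools above measure full page loads, including images, scripts and rendering. For a quick, repeatable check of how fast the server returns the HTML alone, a Python sketch like the one below can help. It times only the download of the document itself, so treat it as a complement to those tools, not a replacement. `time_fetch` is a hypothetical helper name.

```python
import statistics
import time
import urllib.request


def time_fetch(url: str, runs: int = 3, timeout: float = 30.0) -> dict:
    """Download `url` `runs` times; report the median time and the body size.

    Measures only the HTML document, not images, scripts or rendering.
    """
    durations = []
    size = 0
    for _ in range(runs):
        start = time.perf_counter()
        with urllib.request.urlopen(url, timeout=timeout) as response:
            body = response.read()
        durations.append(time.perf_counter() - start)
        size = len(body)
    # The median is less sensitive than the mean to one slow outlier run.
    return {"median_seconds": statistics.median(durations), "bytes": size}
```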

For server-side issues, I recommend the following:

1. Use a CDN (content delivery network); you are probably already using one.

2. Use a caching system.
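A caching system avoids rebuilding the same page for every request. In production this is usually a dedicated layer, such as a reverse proxy or an object cache, but the idea fits in a few lines. The sketch below is an in-process cache with a time-to-live. Several details are assumptions for illustration: the class name, the 60-second TTL in the usage note, and the injectable `clock`, which is there only to make the cache testable.

```python
import time
from typing import Callable


class TTLCache:
    """Tiny in-memory cache that keeps each rendered page for `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}  # key -> (stored_at, html)

    def get_or_render(self, key: str, render: Callable[[], str]) -> str:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # fresh copy: skip the expensive render
        value = render()  # missing or expired: rebuild and store
        self._store[key] = (now, value)
        return value
```

Create `cache = TTLCache(ttl=60)`, then call `cache.get_or_render("/page1.html", render_page)`, where `render_page` is your own (hypothetical) page builder. The cache calls `render_page` at most once per minute for that URL.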

VI. Clean all 404 pages found in Google Webmaster Tools (GWT)

Request removal of the 404 pages in GWT, and also deal with the pages that link to them. You can use Xenu's Link Sleuth to identify broken links and 404 pages, then fix them.
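Xenu's Link Sleuth does this from a desktop crawl. If you already have a list of internal links per page, you can script the same check. For example, the list might come from a crawl export in a hypothetical page-to-targets format. The sketch below sends one HEAD request to each distinct link target. It then maps every 404 target to the pages that link to it, which gives you the list of pages to fix. Some servers reject HEAD requests, and the sketch does not catch network errors, so treat it as a starting point.

```python
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for `url` using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses arrive as HTTPError


def find_broken_links(links: dict[str, list[str]],
                      status_of: Callable[[str], int] = http_status) -> dict[str, list[str]]:
    """Map each 404 link target to the pages that link to it."""
    statuses: dict[str, int] = {}  # check each distinct target only once
    broken: dict[str, list[str]] = {}
    for page, targets in links.items():
        for target in targets:
            if target not in statuses:
                statuses[target] = status_of(target)
            if statuses[target] == 404:
                broken.setdefault(target, []).append(page)
    return broken
```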

Addressing these priority issues when auditing a large website can produce tremendous results!